In Part 1 of this series-within-a-series, we looked at the requirements for quality by design in E6 and E8. In Part 2, we looked at some supporting tools from Transcelerate, which were a bit general. In Part 3, we look at CTTI's toolkit, which includes an overview, training materials, a Principles document, workshop tools, and more.

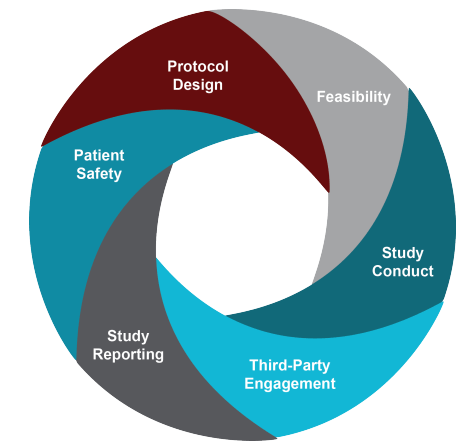

CTTI has some simple, effective materials for presenting the basic concept of quality by design. As with Transcelerate's tools, however, the presentation of critical-to-quality factors is extremely broad. CTTI has developed this graphic to help teams assess critical-to-quality factors:

Of these categories, "patient safety" is the only true quality factor; protocol design, feasibility, study conduct, third-party engagement, and study reporting are all (extremely broad!) processes.

CTTI has published a Principles document to help teams make selections within these broad categories. CTTI has helpfully added a bold footer to each and every page of this 24-page document: "REMINDER: This document is intended to be a discussion tool, not to serve as a checklist." We are grateful for the attempt to save us, but we suspect that plenty of companies are using it as a checklist anyway.

The Principles document includes narrowly focused critical-to-quality factors such as eligibility criteria, randomization, informed consent, withdrawal criteria, and stopping rules. It also includes broader processes such as training, statistical analysis, and data monitoring and management. Each factor or process has a description, considerations in evaluating the importance of the factor, and examples to consider in evaluating risks. For example, to determine the importance of Training as a critical-to-quality factor, we are invited to consider the following:

- For what critical activities are focused and/or targeted training necessary?

- Consider any study-specific assessments for which staff must be certified vs. trained.

- Will roll-in trial participants be used at sites? How many? How will these trial participants contribute to the overall findings of the study?

- How might human factors play a role in the intended use of the investigational product?

These are good operational questions, but...is Training a critical-to-quality factor, or is it a mitigation measure to address risks to the critical-to-quality factors that are implied, but not stated outright, in these questions? Wouldn't the critical-to-quality factor be the critical activity that would require targeted training? Or the study-specific assessments that require training? Or the human factors that might prevent participants from administering IMP correctly?

It feels backwards to us: these tools encourage study teams to start with the mitigation measure and feel their way toward what might be the critical-to-quality factor. We suspect that any team that used this tool (NOT A CHECKLIST!) for discussion would get distracted by factors that are not, ultimately, critical to quality.

The tool is also not helpful for articulating risks. Let's imagine that our discussion on training had led us to a critical process, for example IMP administration, where participants are likely to make errors. We are invited to consider the following questions:

- Could delivery of training be tailored dependent on the topic and the audience?

- Are the steps required to achieve any required certification clearly described in the protocol/investigational plan?

- Is it feasible to test the effectiveness of training?

Again, all great questions to consider once you've identified a risk and settled on training as a way of addressing that risk, but where is the risk discussion?

It's not that the tool isn't useful, but we think it's likely to cause confusion by muddling critical-to-quality factors, risks, and mitigations. In our next blog post, we'll propose a simpler method of conveying these concepts.