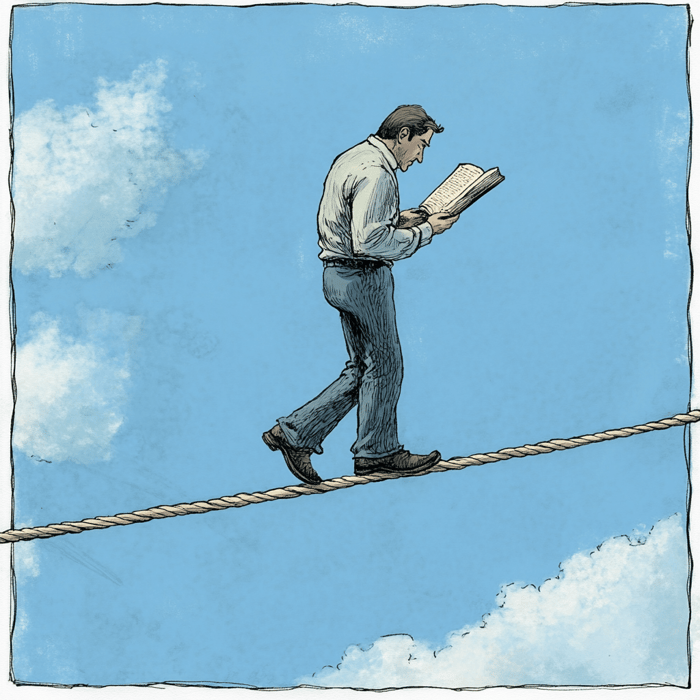

The third entry in our Risk Management Book Club, Thinking, Fast and Slow (Farrar, Straus and Giroux; 2013) was written by Daniel Kahneman, a psychologist who won a Nobel Prize in economics for challenging the concept that humans in the economic market always behave rationally. In this book, Kahneman posits two types of human thought processes: "fast" thinking, which is intuitive, subjective, and impressionable; and "slow" thinking, which is more deliberate, evidence-based, and analytical.

Kahneman's research makes a strong case that even "slow" thinking is heavily influenced by emotion. Study after study suggests that decision-making is biased by anchoring, which means starting a discussion or negotiation at a particular point; priming, which is the influence of a suggestive word or visual cue; and availability, which is the weight given to the known or easily accessible over that which we have to work harder to know. At the same time, we tend to plan over-optimistically, be overconfident in our decisions, and ignore basic statistics that could inform decision-making in favor of intuition.

In other words, people are lazy thinkers. As Kahneman puts it,

"When faced with a difficult question, we often answer an easier one instead, usually without noticing the substitution."

What does this have to do with risk management? In addition to formal risk management procedures, we in clinical research frequently evaluate options and make decisions that increase or decrease risk. For example, every clinical trial starts with selections of vendors, sites, and site monitors, decisions that are so important that we must demonstrate how we made them during regulatory inspections. How often do we weigh evidence and make a rational decision, versus relying - as the kids say - on vibes?

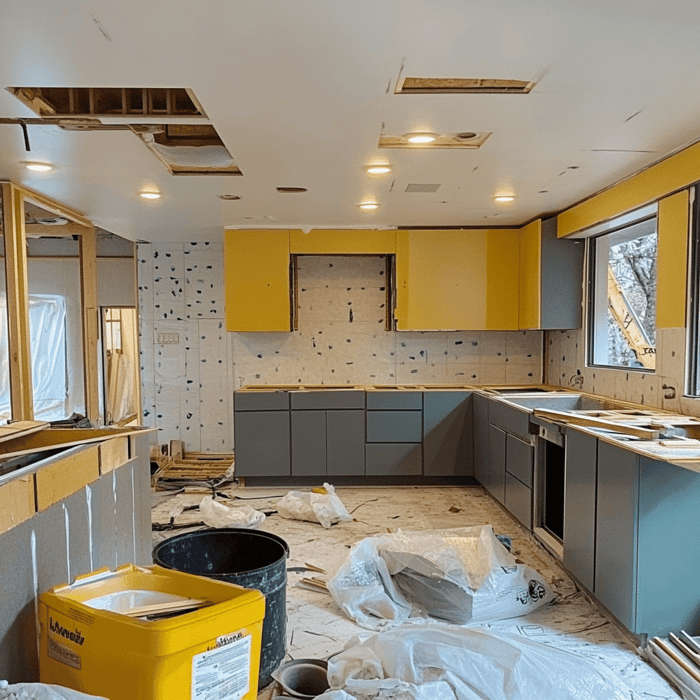

Kahneman cites studies that show that even a simple algorithm, if validated, performs better at decision-making than human intuition. For example, we've all worked with non-compliant sites that we've struggled to bring into compliance. Sometimes we invest months of our time - increased monitoring, medical monitor visits, audits, investigations, CAPAs - before concluding that the site is a liability (or, worse, we just run out the clock until the study's over). If over the years we had collected data on the point at which a non-compliant clinical site is no longer worth the investment, we could use that information to terminate problem sites before they become too big of a problem. Instead, when faced with a non-compliant site, all the biased thinking described by Kahneman comes into play. We're overconfident - we're sure the site will do better. We're subjective - we are influenced by the PI's charm, or by the reactions of other team members who are discussing the site. We cling to the sunk cost fallacy, which tells us we've spent so much time and effort on the site already that it would be a waste to abandon it now.

Kahneman has some useful tips for avoiding overconfidence and bias:

Data gathering. To avoid overoptimistic planning, Kahneman suggests gathering historical data to help anchor plans in reality. For example, instead of asking the study team how long it will take from database lock to top-line analysis, a project manager would review timelines from previous similar studies and push back if the team's plan is too close to the best-case scenario.

Premortem. To encourage realistic risk assessment, Kahneman suggests that teams engage in this thought experiment: "Imagine we've proceeded with this plan, it's a year down the road, and it's been a disaster. What happened?" Forcing the team to consider potential negative outcomes gives them permission to set aside "happy path" thinking.

Influence. To avoid being influenced by others' judgments during decision-making, he recommends having team members write down their position before any discussion occurs. This helps prevent team members from "falling into line" behind the first person who speaks.

Awareness. By being aware of these biases, we can deliberately "slow down" our thought processes. For example, a QA lead who understands that a study team is prone to jump to solutions during a quality investigation can insist they identify and investigate potential root causes before generating CAPAs.
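For teams that keep timeline data, the data-gathering tip above could be roughed out in a few lines of Python. This is only a sketch under stated assumptions: the function name `review_estimate`, the 25% cutoff (`min_share`), and the sample durations are all invented for illustration and do not come from Kahneman or from any real study. It checks how many previous similar studies actually finished within the team's estimate and flags the plan when very few did.

```python
from statistics import median


def review_estimate(team_estimate_days, past_durations_days, min_share=0.25):
    """Compare a team's estimate with durations from previous similar studies.

    min_share is a hypothetical cutoff: if fewer than this share of past
    studies finished within the estimate, the plan is treated as too close
    to the best-case scenario.
    """
    if not past_durations_days:
        raise ValueError("need at least one historical duration")
    # Count past studies that finished at or under the team's estimate.
    within = sum(1 for d in past_durations_days if d <= team_estimate_days)
    share = within / len(past_durations_days)
    return {
        "historical_median": median(past_durations_days),
        "best_case": min(past_durations_days),
        "share_within_estimate": share,
        "push_back": share < min_share,
    }


# Made-up durations, in days from database lock to top-line analysis.
past = [21, 28, 30, 35, 42, 45, 60]
result = review_estimate(team_estimate_days=22, past_durations_days=past)
print(result)
```

With these invented numbers, only one of the seven past studies finished within 22 days, so the sketch flags the plan for pushback. The cutoff itself is a judgment call each organization would need to set and validate against its own history.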