The term “artificial intelligence” is half right. The first half.

So called AIs are indeed artificial. But intelligent? Hardly. Not even a little bit. Granted, they do an eerily good job of appearing intelligent, but it is only a simulacrum.

To understand this we need to first define what we mean by AI? For this article we will be narrowing our focus onto a subset of AIs called Large Language Models (LLMs). An LLM is a generative AI, in that it can generate language from a prompt. Other generative AIs may generate images or video or music, but LLMs generate text. Give an LLM a prompt and it will generate text in response.

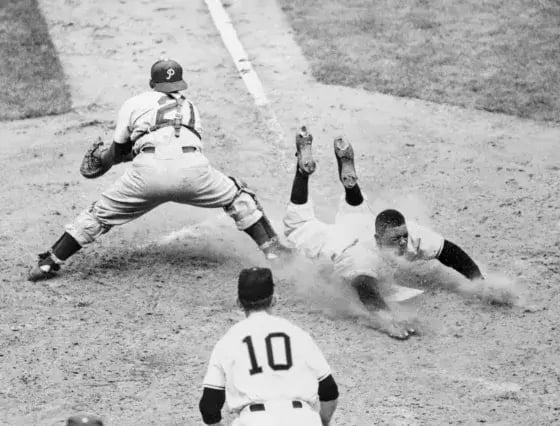

The text an LLM generates is, of course, not random. No one would be impressed if you prompted an LLM with the text, “Who was the greatest baseball player of all time?” and it replied, “Purple monkey dishwasher.” Instead, LLMs generate meaningful, grammatically correct responses to a prompt. For instance, when I asked ChatGPT (a well known LLM interface) the above question, it replied at great length, beginning with, “Determining the best baseball player of all time is subjective and often depends on the criteria used,” and continuing from there. That’s pretty impressive, despite the fact that it could simply have said, “Willie Mays.”

But don't be fooled. ChatGPT knows nothing about baseball. It doesn't even dip into some database where the answer might be stored. No, what ChatGPT is actually doing is choosing a series of words that may reasonably follow some other series of words. It's glorified type-ahead, much like what you’re used to when typing on your phone or in a modern word processor. That’s it. Full stop.

To pull off this party trick an AI needs a model of how language works. From the 1950s through the ‘80s, there were many attempts at modeling language via the creation of complex sets of rules that allowed a computer to work with vocabulary, grammar, and idiomatic usage. These met with little success.

Starting in the 1990s, however, as computing power and storage increased, a statistical approach to language modeling started to take hold. It would be better, engineers reasoned, if a language model could train itself. Such a system could be provided with a great deal of unstructured text and then draw inferences from the patterns it detects. In this scenario, if you were to train a model on, say, the contents of every US newspaper published since 1903, it would not only be able to parse most questions, it would do a pretty good job of generating a reasonable response.

Did you notice all the weasel words in the last sentence? Pretty good. Reasonable. This is because systems like this are not knowledge engines or databases, they are language models. And as previously stated, they are really only good at one thing: selecting a word or phrase that appears likely to follow a previous word or phrase, accuracy be damned.

Why is that? Let’s dig in.

Imagine, you were to feed an empty language model the following text: “Cats make good pets. Dogs also make good pets. Alligators make bad pets.”

In this case, the AI makes a note of the word “Cat.” (It may have simple stemming and stop-word recognition, so that it recognizes that “cats” is the plural of “cat.”) Later, it notes the word “good” and draws a weighted reference between the two.

cat → 75 → good

When it sees the word “pet,” it can make two references.

cat → 75 → good → 80 → pet

cat → 60 → pet

good → 80 → pet

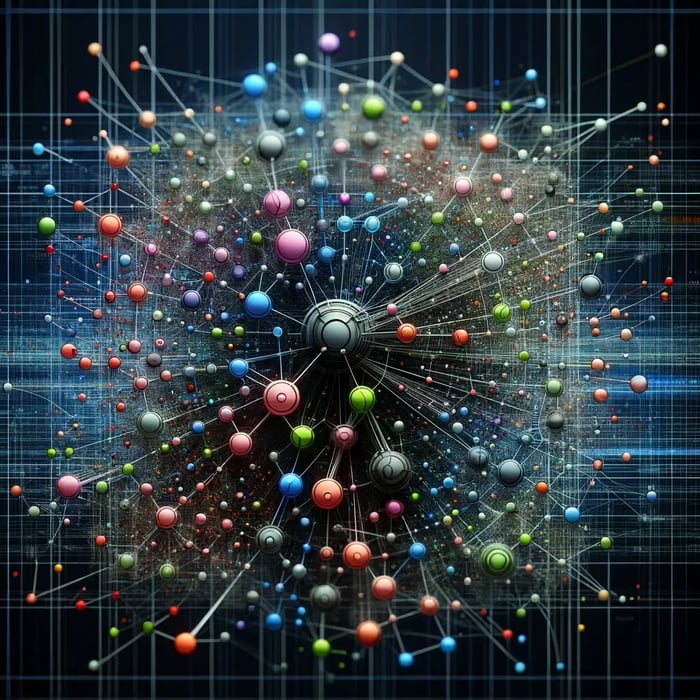

We can represent the same data differently:

In this rendering, each connection that a word has to another can be considered a “dimension” (left, right, up, down, diagonal) and the length of the arrow (near, far, very far) represents a weighted vector. Words that are frequently found next to each other in the text will be “next” to each other in this multi-dimensional representation.

Let’s keep going.

Here we can see that after parsing “Dogs also make good pets” that the word “good” got closer to “pets.” “I’ve seen ‘pet’ follow ‘good’ twice now,” thinks the AI, “maybe it’s a phrase,” and strengthens the link between them. In addition, dogs and cats are now also related, though slightly more distantly. And finally, dogs are pets, cats are pets, and both may be considered “good pets.”

I’ll let you draw in the vectors that map to “Alligators are bad pets.” But if we were to stop the training there, you would note that alligators are as likely to be a pet as dogs and cats, and that’s not right. The problem here is the size of the dataset. There isn’t enough text for the model to learn that “bad” and “good” are opposites, and that bad pets are very different from good pets. But given enough input, it would in the end “teach” itself how language (in this case English) looks. Not works, looks.

When sufficiently trained, you can imagine this model as a giant cube of words and their relationships to one another. In this cube “dogs” and “cats” are frequently very near each other. So too are “lions” and “cats.” But “lions” are somewhat further away from “pets” than “house cats” are. Occasionally, there’s a strongish relationship between “alligators” and “cats,” they’re both animals after all, but not as strong as the relationship between “alligators” and “dangerous.”

Our cat/dog/alligator model, however, is still very simple. Arguably capable of writing a very bad Magic Treehouse story, bit little else. So keep in mind that a real model will have sucked up all of Wikipedia, every out-of-copyright novel (and likely many still under copyright), every blog post, every news article, every corporate and government website, indeed everything that it can get its grubby little fingers on. This input, mind you, is not a collection of facts and cogent analyses—AIs don't care about facts. It’s phrasing and idioms and style and prejudices and lies, all repeated in different ways over and over and over again. It’s English! Or a rich facsimile thereof.

We can now consider asking the broadly trained AI to respond to the prompt, “Who won the World Series in 2021?” And like watching an MRI of a human brain, you can imagine a part of this multi-dimensional large language model lighting up. The part that is closest to the phrase “won world series 2021.” The AI then focuses on the lit up area of itself, looking for what words are nearby, and latches on to the strongly weighted word “Atlanta.” Recursively, it then looks to see what part of its “brain” is related to “won world series 2021 Atlanta” and picks “Braves.” After a little more algorithing like this, it generates the response, “The Atlanta Braves won the 2021 World Series, beating the Tampa Bay Rays four games to two.”

Wow!

Wait. What?

The knowledgeable reader may notice something unusual about that response. It’s wrong. The Braves didn’t beat the Rays, they beat the Houston Astros!

What’s going on here? How did the model get this “wrong?” The fact is, the model didn’t get anything wrong. It did exactly what it was supposed to do; generate a string of words that could reasonably follow another string of words. The model did not generate the response, “Yellow extra speed 21 like.” That doesn’t look reasonable. Instead it generated a plausible response. Wrong, but plausible.

Remember—always remember—that generative AIs are just pattern replicators. They are not knowledge engines. They don’t know anything. They only regurgitate words that are likely to follow other words. And it does this non-deterministically. That is, when presented with the same input it does not necessarily generate the same output. There is inherent randomness in the path it takes when generating a response.

Our example is admittedly contrived. It’s very likely that an actual LLM will always give the “right” answer. But it’s not 100%. At any one point, the model may skip over the vector that connects the terms “baseball,” “2021,” and “Braves” to “Houston,” and instead choose the weaker vector that connects these terms to “Tampa Bay” (who lost the 2020 World Series to the Dodgers, four games to two).

Most actual models give the user the ability to dial the randomness up and down (but not off). This dial is called temperature. With ChatGPT the value can be between 0 and 2. The higher the temperature, the more randomness will be introduced. This essentially is a dial between factualness and creativeness. If you want ChatGPT to write a song about baseball, set the temperature to 2. If you want it to describe the late great Willie Mays’s basket catch, set it to 1. And if you want to know who won the World Series in 2021, set it to 0. But keep in mind that 0 is not no randomness, it’s just less randomness.

It’s interesting to note that if a model chooses a less than ideal vector, that new term becomes part of its input, leading it to choose the next vector based on that. In other words, once an AI starts going wrong, it will likely get things increasingly wrong. We call this “hallucinating.”

Further note that an LLM can’t distinguish between good and bad, right and wrong, old and new. All of its training data is equally valuable. This means that if a model is trained with dated, vile, and inaccurate data, it will happily give dated, vile, and inaccurate responses to a prompt. Although, to be fair, companies that productize LLMs work hard to prevent this, usually successfully.

By this point you may be wondering what the heck is the point of these things? That’s a good question, and a lot of “very smart people” are wondering the same thing. The technology underpinning LLMs does have some valid and useful use cases, and we’ll discuss those in a future post. But none of those use cases can explain the hype that’s going on right now.

It will be some time before we descend from the peak of inflated expectations to the plateau of productivity. Until then, just keep in mind that an AI engine, even Reggie, is not—repeat, NOT—the all knowing and all seeing Oz. It’s just really really good type-ahead.